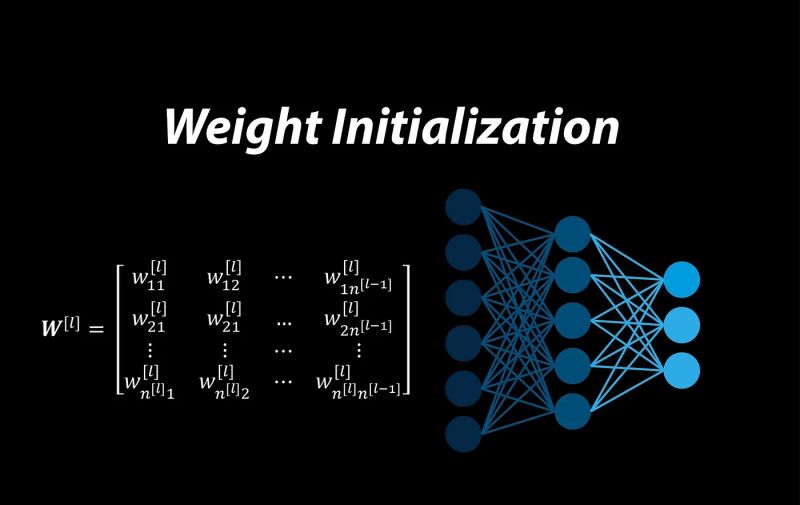

💡 𝗪𝗲𝗶𝗴𝗵𝘁 𝗜𝗻𝗶𝘁𝗶𝗮𝗹𝗶𝘇𝗮𝘁𝗶𝗼𝗻 in Deep Learning: What is it, and why should you care about it?

Let’s start with 𝘄𝗲𝗶𝗴𝗵𝘁𝘀.

Those are the 𝗳𝗹𝗼𝗮𝘁𝗶𝗻𝗴-𝗽𝗼𝗶𝗻𝘁 numbers that a model learns during training and that somehow encapsulate the magic of deep learning.

But what’s the value of those floats when we start training?

Should they be set randomly? Should they be kept at zero or one? Will that be optimal? Will it help the model converge faster?

Let’s find out.

Imagine you are in your garden planting seeds.

If you plant seeds too deep (weights too small), they might never sprout.

If you plant them too shallow (weights too large), they might sprout too fast but won’t grow strong roots.

The right depth ensures healthy growth—just like proper weight initialization ensures good learning.

It reminds me of 𝗚𝗼𝗹𝗱𝗶𝗹𝗼𝗰𝗸𝘀 🙂 Not too deep, not too shallow, but just right.

𝗪𝗵𝘆 𝗪𝗲𝗶𝗴𝗵𝘁 𝗜𝗻𝗶𝘁𝗶𝗮𝗹𝗶𝘇𝗮𝘁𝗶𝗼𝗻 𝗠𝗮𝘁𝘁𝗲𝗿𝘀

At the beginning of training, weights need to be initialized to some starting values.

Why? Because if you don’t start with good initial values, bad things can happen 🙂

1️⃣ Vanishing Gradients: Weights that are too small can cause gradients to shrink exponentially as they backpropagate. This slows down learning, especially in the early layers.

2️⃣ Exploding Gradients: Conversely, weights that are too large can cause gradients to grow uncontrollably, leading to unstable training.

3️⃣ Symmetry Breaking: If all weights are initialized to the same value, neurons in the same layer compute the same output, receive the same gradient, and end up learning the same features, defeating the purpose of having multiple neurons. Random initial values break this symmetry.

4️⃣ Training Efficiency: Poor initialization can make optimization unnecessarily slow or leave it at a suboptimal solution.
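To make points 1️⃣ to 3️⃣ concrete, here is a minimal sketch in plain Python (no framework, so it is slow but dependency-free). The helper names `signal_rms` and `hidden_weight_grads`, the layer sizes, and the use of purely linear layers in the first helper are assumptions made for illustration. It tracks the forward signal; in a linear stack, gradients flow back through the same weight matrices, so they shrink or grow in much the same way.

```python
import math
import random


def signal_rms(std, depth=20, width=64, seed=0):
    """RMS of the signal after `depth` linear layers with N(0, std^2) weights."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(width)]
    for _ in range(depth):
        w = [[rng.gauss(0.0, std) for _ in range(width)] for _ in range(width)]
        x = [sum(wij * xj for wij, xj in zip(row, x)) for row in w]
    return math.sqrt(sum(v * v for v in x) / width)


def hidden_weight_grads(w1, w2, x, target):
    """Gradient of 0.5 * (y - target)^2 w.r.t. each hidden neuron's weights,
    where y = sum_j w2[j] * tanh(w1[j] . x)."""
    h = [math.tanh(sum(wi * xi for wi, xi in zip(row, x))) for row in w1]
    y = sum(w2j * hj for w2j, hj in zip(w2, h))
    err = y - target
    return [[err * w2j * (1.0 - hj * hj) * xi for xi in x]
            for w2j, hj in zip(w2, h)]


# Points 1 and 2: the signal scale after 20 layers depends heavily on the weight scale.
width = 64
too_small = signal_rms(std=0.01, width=width)
too_large = signal_rms(std=1.0, width=width)
just_right = signal_rms(std=1.0 / math.sqrt(width), width=width)
print(f"too small: {too_small:.3e}")
print(f"too large: {too_large:.3e}")
print(f"just right: {just_right:.3e}")

# Point 3: with constant weights, every hidden neuron gets the same gradient.
x = [0.5, -1.0, 2.0]
same = hidden_weight_grads([[0.1] * 3] * 4, [0.1] * 4, x, target=1.0)
rng = random.Random(1)
random_w1 = [[rng.gauss(0.0, 0.5) for _ in range(3)] for _ in range(4)]
mixed = hidden_weight_grads(random_w1, [0.1] * 4, x, target=1.0)
print("constant init, identical gradients:", all(g == same[0] for g in same))
print("random init, identical gradients:", all(g == mixed[0] for g in mixed))
```

The "just right" scale here is 1 / sqrt(width), which keeps the signal roughly the same size from layer to layer in this linear toy setup. Real networks add nonlinearities, which is where the schemes below come in.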

𝗪𝗵𝗮𝘁’𝘀 𝘁𝗵𝗲 𝗿𝗲𝗺𝗲𝗱𝘆?

Here are some common weight initialization techniques.

1️⃣ Random Initialization: Weights are drawn at random, typically from a uniform or normal distribution.

2️⃣ Xavier Initialization (Glorot Initialization): Adjusts the scale of random weights based on the number of input and output neurons (variance 2 / (fan_in + fan_out)).

3️⃣ He Initialization: Similar to Xavier Initialization, but scales weights based on the number of input neurons only (variance 2 / fan_in).

4️⃣ LeCun Initialization: Also scales weights by the number of input neurons (variance 1 / fan_in). Commonly paired with sigmoid and tanh activations to keep the variance stable across layers.
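The three scaled schemes above differ only in the variance they pick. Here is a rough sketch of their normal-distribution variants in plain Python. The function names `init_std` and `init_weights` are made up for this post, and real frameworks ship their own (uniform and normal) versions, so treat this as an illustration of the formulas rather than a drop-in replacement.

```python
import math
import random


def init_std(scheme, fan_in, fan_out):
    """Standard deviation used by the normal variant of each scheme."""
    if scheme == "xavier":  # Glorot: Var = 2 / (fan_in + fan_out)
        return math.sqrt(2.0 / (fan_in + fan_out))
    if scheme == "he":      # He: Var = 2 / fan_in
        return math.sqrt(2.0 / fan_in)
    if scheme == "lecun":   # LeCun: Var = 1 / fan_in
        return math.sqrt(1.0 / fan_in)
    raise ValueError(f"unknown scheme: {scheme!r}")


def init_weights(scheme, fan_in, fan_out, seed=0):
    """Return a fan_out x fan_in weight matrix as a list of rows."""
    rng = random.Random(seed)
    std = init_std(scheme, fan_in, fan_out)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]


# Example: a layer with 256 inputs and 128 outputs.
for scheme in ("xavier", "he", "lecun"):
    print(scheme, round(init_std(scheme, fan_in=256, fan_out=128), 4))
```

Note that for the same layer, He Initialization always gives a larger spread than LeCun (by a factor of sqrt(2)), which compensates for ReLU zeroing out roughly half of the pre-activations.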

When in doubt, use He Initialization for ReLU-based activations and Xavier or LeCun for sigmoid/tanh activations.
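Why He for ReLU? A quick, hedged experiment in plain Python: push one random input through a stack of ReLU layers and compare the signal size at the end. The helper name `relu_signal_rms`, the depth of 20, and the width of 64 are arbitrary choices for this sketch, and equal layer widths are assumed so that Xavier's variance reduces to 1 / width.

```python
import math
import random


def relu_signal_rms(std, depth=20, width=64, seed=0):
    """RMS of the activations after `depth` ReLU layers with N(0, std^2) weights."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(width)]
    for _ in range(depth):
        w = [[rng.gauss(0.0, std) for _ in range(width)] for _ in range(width)]
        x = [max(0.0, sum(wij * xj for wij, xj in zip(row, x))) for row in w]
    return math.sqrt(sum(v * v for v in x) / width)


width = 64
he_rms = relu_signal_rms(std=math.sqrt(2.0 / width), width=width)
xavier_rms = relu_signal_rms(std=math.sqrt(2.0 / (width + width)), width=width)
print(f"He:     {he_rms:.3e}")
print(f"Xavier: {xavier_rms:.3e}")
```

ReLU discards about half of the signal's energy at every layer. He Initialization doubles the weight variance to make up for that, so the signal stays at a usable scale, while Xavier's smaller variance lets it fade as the network gets deeper.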