💡𝗥𝗲𝗴𝘂𝗹𝗮𝗿𝗶𝘇𝗮𝘁𝗶𝗼𝗻 in 𝗗𝗲𝗲𝗽 𝗟𝗲𝗮𝗿𝗻𝗶𝗻𝗴: the intuition behind it.

Regularization 101: it's what we use when a model does well on training data but not so well on unseen data, a.k.a. overfitting 🙂

But is there more to it? Let’s figure it out.

Remember that one guy in school who memorized everything in the books and every word from the teacher’s mouth, but didn’t perform well when the questions were twisted a bit?

What happened?

He memorised the lessons but didn’t understand the concepts behind them, so he couldn’t apply them to questions he hadn’t seen before.

That’s 𝗼𝘃𝗲𝗿𝗳𝗶𝘁𝘁𝗶𝗻𝗴, and to correct it we need regularization.

Regularization acts as that good teacher, guiding the student to focus on core concepts rather than memorizing irrelevant details.

Regularization essentially addresses 3 related problems.

1️⃣ Overfitting: Discourages the model from fitting the noise or irrelevant details in the training data.

2️⃣ Model Complexity: Reduces the model’s effective complexity by constraining its capacity, so it doesn’t overlearn.

3️⃣ Bias-Variance Tradeoff: Helps strike a balance between underfitting (too simple) and overfitting (too complex), accepting a little extra bias in exchange for lower variance.

So, how do we regularize a model?

Quite a few ways, actually.

Let’s look at the most important ones and try to understand them without getting into any maths. Shall we?

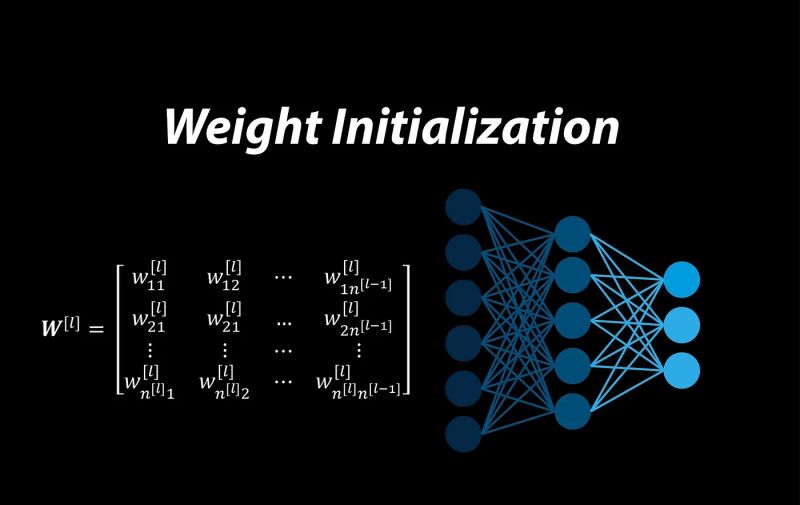

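1️⃣ L1 and L2 Regularization – A way to discourage large weights. A penalty term added to the loss dampens large weights: L1 penalizes the sum of absolute weights (and tends to push some weights to exactly zero), while L2 penalizes the sum of squared weights (and shrinks all weights smoothly toward zero).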

2️⃣ Dropout – Randomly “drops out” (sets to zero) a fraction of neurons at each training step, and is switched off at inference. This stops the network from relying too heavily on any specific neuron and promotes generalization.

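3️⃣ Data Augmentation – Why not give that student different variants of each question, so he gets really good at grasping the concepts? For images, that means flips, crops, small shifts and similar transformations that create new training examples from existing ones.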

4️⃣ Early stopping – Watch the loss on a validation set and stop training once it stops improving, i.e. before the model starts memorising.

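5️⃣ Batch Norm – Normalises each neuron’s activations over a mini-batch (centred to mean 0 and scaled to variance 1, then rescaled by a learnable scale and shift), so that all neurons get a fair chance in the next layer. Its regularizing effect comes from the slight noise in the mini-batch statistics.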

6️⃣ Elastic Net – A weighted combination of the L1 and L2 penalties.
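For anyone who does want a peek under the hood, here is a minimal plain-Python sketch of the L1, L2 and Elastic Net penalties being added to a loss. The names `lam` (penalty strength) and `alpha` (L1/L2 mix) are illustrative assumptions, not any library's API, and real frameworks differ in exactly how they scale each term.

```python
# Minimal sketch of weight penalties added to a data loss.
# `lam` and `alpha` are hypothetical parameter names chosen for illustration.

def l1_penalty(weights, lam):
    # L1: lam * sum of absolute weights
    return lam * sum(abs(w) for w in weights)

def l2_penalty(weights, lam):
    # L2: lam * sum of squared weights
    return lam * sum(w * w for w in weights)

def elastic_net_penalty(weights, lam, alpha):
    # Elastic Net: alpha=1 gives pure L1, alpha=0 gives pure L2
    return alpha * l1_penalty(weights, lam) + (1 - alpha) * l2_penalty(weights, lam)

def regularized_loss(data_loss, weights, lam, kind="l2", alpha=0.5):
    # Total loss = how badly we fit the data + how "big" the weights are
    if kind == "l1":
        return data_loss + l1_penalty(weights, lam)
    if kind == "l2":
        return data_loss + l2_penalty(weights, lam)
    if kind == "elastic":
        return data_loss + elastic_net_penalty(weights, lam, alpha)
    raise ValueError(f"unknown penalty: {kind}")

weights = [3.0, -0.5, 0.0, 1.0]
for kind in ("l1", "l2", "elastic"):
    print(kind, regularized_loss(1.0, weights, lam=0.1, kind=kind))
```

The large weight (3.0) dominates the L2 term because it gets squared, which is why L2 hits big weights hardest. The gradient of the L1 term is a constant pull of size `lam` toward zero, while L2's pull (`2 * lam * w`) fades as a weight gets small; that is the intuition for why L1 zeroes weights out and L2 only shrinks them.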
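Here is a minimal sketch of dropout, assuming the common “inverted dropout” variant: surviving activations are scaled by 1/(1-p) during training, so nothing needs rescaling at inference. The `dropout` function and its parameters are hypothetical, written in plain Python for illustration rather than taken from any framework.

```python
import random

def dropout(activations, p, training=True, rng=random):
    """Inverted dropout (illustrative sketch).
    During training, zero each activation with probability p and scale the
    survivors by 1/(1-p) so the expected activation is unchanged.
    At inference (training=False) the input passes through untouched."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)           # seeded so the run is repeatable
acts = [1.0] * 10
print(dropout(acts, p=0.5, rng=rng))         # a random mix of 0.0 and 2.0
print(dropout(acts, p=0.5, training=False))  # unchanged at inference
```

Because a different random subset is dropped at every step, no single neuron can be relied on, which is exactly the “don't lean on one friend for every answer” effect described above.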
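To make data augmentation concrete, here is a toy sketch on a tiny 2D “image” (a list of rows), assuming two common transformations: a random horizontal flip and a small random shift done by padding and then cropping. All function names are hypothetical; real pipelines use library transforms. An augmentation only makes sense if it keeps the label correct (a mirrored cat photo is still a cat, but a mirrored letter may not be a valid letter).

```python
import random

def horizontal_flip(image):
    """Mirror a 2D image (list of rows) left to right."""
    return [row[::-1] for row in image]

def random_crop_with_padding(image, pad, rng):
    """Zero-pad by `pad` pixels on each side, then crop back to the original
    size at a random offset: effectively a small random translation."""
    h, w = len(image), len(image[0])
    padded = [[0] * (w + 2 * pad) for _ in range(pad)]
    padded += [[0] * pad + list(row) + [0] * pad for row in image]
    padded += [[0] * (w + 2 * pad) for _ in range(pad)]
    top = rng.randint(0, 2 * pad)   # randint is inclusive on both ends
    left = rng.randint(0, 2 * pad)
    return [row[left:left + w] for row in padded[top:top + h]]

def augment(image, rng, pad=1, flip_prob=0.5):
    """Return a randomly transformed copy: a new 'variant of the question'."""
    out = horizontal_flip(image) if rng.random() < flip_prob else [list(r) for r in image]
    return random_crop_with_padding(out, pad, rng)

rng = random.Random(42)
image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
for _ in range(3):
    print(augment(image, rng))  # same size, slightly different each time
```

Each call produces a fresh variant, so the model rarely sees the exact same input twice and has to learn what the variants have in common.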
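Early stopping, in its simplest form, is just bookkeeping on the validation loss. The sketch below assumes a `patience` rule (stop after that many consecutive epochs without improvement) and keeps the weights from the best epoch. The loss values are made up for illustration, and `early_stopping_epoch` is a hypothetical helper, not a library function.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a sequence of per-epoch validation losses.
    Training stops once the loss fails to improve by more than `min_delta`
    for `patience` consecutive epochs; the weights from best_epoch are kept."""
    best_loss = float("inf")
    best_epoch = -1
    bad_epochs = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, bad_epochs = loss, epoch, 0  # new best: save weights here
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                return best_epoch, epoch  # stop training, restore best weights
    return best_epoch, len(val_losses) - 1  # ran out of epochs without triggering

# Hypothetical validation losses: improving, then rising as the model starts to memorise
losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.70]
print(early_stopping_epoch(losses, patience=2))
```

In a real training loop you would compute one validation loss per epoch and run the same check as you go, instead of having the whole list up front.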
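Here is a minimal sketch of the batch norm forward pass in training mode, in plain Python. `gamma` and `beta` are the learnable scale and shift, and `eps` avoids division by zero. It assumes per-feature statistics computed over the mini-batch and leaves out the running averages that real implementations keep for use at inference; the function names are hypothetical.

```python
import math

def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalise one feature over a mini-batch (training-mode statistics):
    centre to mean 0, scale to variance ~1, then apply gamma and beta."""
    n = len(batch)
    mean = sum(batch) / n
    var = sum((x - mean) ** 2 for x in batch) / n  # biased variance over the batch
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]

def batch_norm_layer(rows, gammas, betas, eps=1e-5):
    """Apply batch_norm column-wise; rows is a mini-batch [n_samples][n_features]."""
    columns = list(zip(*rows))
    normed = [batch_norm(list(col), g, b, eps) for col, g, b in zip(columns, gammas, betas)]
    return [list(r) for r in zip(*normed)]

# Two neurons on wildly different scales (hypothetical activations)
rows = [[100.0, 0.1],
        [200.0, 0.2],
        [300.0, 0.3],
        [400.0, 0.4]]
for row in batch_norm_layer(rows, gammas=[1.0, 1.0], betas=[0.0, 0.0]):
    print([round(v, 3) for v in row])
```

Both columns come out on the same scale even though the first neuron's raw activations are about 1000× larger, which is the “fair chance in the next layer” idea in code.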